The PostGIS development team is pleased to provide bug fix releases for PostGIS 3.0 - 3.6. PostGIS 3.0.12 and 3.1.13 are the End-Of-Life (EOL) releases for the 3.0 and 3.1 series. If you haven’t already upgraded from the 3.0 or 3.1 series, you should do so soon.

February 09, 2026

PostGIS Development

December 28, 2025

Boston GIS (Regina Obe, Leo Hsu)

FOSS4GNA 2025: Summary

Free and Open Source for Geospatial North America (FOSS4GNA) 2025 ran November 3-5, 2025, and I think it was one of the better FOSS4GNAs we've had. I was on the programming and workshop committees, and with the government shutdown we were worried that things could go badly, since people started withdrawing their talks and workshops very close to curtain time. Although our attendance was lower than in prior years, it felt crowded enough and, on the bright side, people weren't fighting for chairs even in the most crowded talks. The FOSS4G 2025 International happened 2 weeks after, in Auckland, New Zealand, and I heard that it had a fairly decent turn-out too.

by Regina Obe (nospam@example.com) at December 28, 2025 11:37 PM

December 09, 2025

Crunchy Data (Snowflake)

PostGIS Performance: Simplification

There’s nothing simple about simplification! It is very common to want to slim down the size of geometries, and there are lots of different approaches to the problem.

We will explore different methods starting with ST_Letters for this rendering of the letter “a”.

SELECT ST_Letters('a');

This is a good starting point, but to show the different effects of different algorithms on things like redundant linear points, we need a shape with more vertices along the straights, and fewer along the curves.

SELECT ST_RemoveRepeatedPoints(ST_Segmentize(ST_Letters('a'), 1), 1);

Here we add in vertices every one meter with ST_Segmentize, then use ST_RemoveRepeatedPoints to thin out the points along the curves. Already we are simplifying!

Let's apply the same “remove repeated” algorithm, with a 10 meter tolerance.

WITH a AS (

SELECT ST_RemoveRepeatedPoints(ST_Segmentize(ST_Letters('a'), 1), 1) AS a

)

SELECT ST_RemoveRepeatedPoints(a, 10) FROM a;

We do have a lot fewer points, and the constant angle curves are well preserved, but some straight lines are no longer legible as such, and there are redundant vertices in the vertical straight lines.

The ST_Simplify function applies the Douglas-Peucker line simplification algorithm to the rings of the polygon. Because it is a line simplifier, it does a cruder job of preserving some aspects of the polygon area, like the squareness of the top ligature.

WITH a AS (

SELECT ST_RemoveRepeatedPoints(ST_Segmentize(ST_Letters('a'), 1), 1) AS a

)

SELECT ST_Simplify(a, 1) FROM a;
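To make the idea concrete, here is a minimal Python sketch of the textbook Douglas-Peucker recursion on an open line. It is an illustration only, not the PostGIS implementation; the function names and the sample line are made up for this example.

```python
import math

def point_segment_distance(p, a, b):
    """Distance from point p to the segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp the projection to its ends
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def douglas_peucker(points, tolerance):
    """Keep only the vertices that lie farther than tolerance from the chord."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    # Find the interior vertex farthest from the chord first-last
    index, dmax = 0, 0.0
    for i in range(1, len(points) - 1):
        d = point_segment_distance(points[i], first, last)
        if d > dmax:
            index, dmax = i, d
    if dmax <= tolerance:
        return [first, last]  # everything in between is within tolerance
    # Otherwise keep the farthest vertex and recurse on both halves
    left = douglas_peucker(points[: index + 1], tolerance)
    right = douglas_peucker(points[index:], tolerance)
    return left[:-1] + right

# Hypothetical line: a nearly straight run followed by a sharp spike
line = [(0, 0), (1, 0.1), (2, 0), (3, 0), (4, 3), (5, 0)]
simplified = douglas_peucker(line, 0.5)
print(simplified)
```

The nearly collinear vertices along the straight run are dropped, while the vertices that define the spike survive.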

The ST_SimplifyVW function applies the Visvalingam–Whyatt algorithm to the rings of the polygon. Visvalingam–Whyatt is better for preserving the shapes of polygons than Douglas-Peucker, but the differences are subtle.

WITH a AS (

SELECT ST_RemoveRepeatedPoints(ST_Segmentize(ST_Letters('a'), 1), 1) AS a

)

SELECT ST_SimplifyVW(a, 5) FROM a;
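For comparison, a small Python sketch of the Visvalingam–Whyatt idea: repeatedly drop the interior vertex whose triangle with its two neighbours has the smallest area, until every remaining triangle is at least as large as the threshold. This is a simple quadratic-time illustration with made-up names and data, and it assumes the tolerance is read as an area; it is not the PostGIS code.

```python
def triangle_area(a, b, c):
    """Area of the triangle formed by three (x, y) points."""
    return abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])) / 2

def visvalingam_whyatt(points, min_area):
    """Drop interior vertices whose 'effective area' is below min_area."""
    pts = list(points)
    while len(pts) > 2:
        # Effective area of every interior vertex
        areas = [
            triangle_area(pts[i - 1], pts[i], pts[i + 1])
            for i in range(1, len(pts) - 1)
        ]
        smallest = min(areas)
        if smallest >= min_area:
            break
        # Remove the least significant vertex, then recompute
        del pts[areas.index(smallest) + 1]
    return pts

# Hypothetical line: a nearly straight run followed by a sharp spike
line = [(0, 0), (1, 0.1), (2, 0), (3, 0), (4, 3), (5, 0)]
simplified_vw = visvalingam_whyatt(line, 1.0)
print(simplified_vw)
```

Because the test is based on area rather than distance from a chord, small wiggles vanish first and large features are the last to go.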

Coercing a shape onto a fixed precision grid is another form of simplification, sometimes used to force the edges of adjacent objects to line up exactly. The original such function, ST_SnapToGrid, does exactly what it says on the name. Every vertex is rounded to a fixed grid point.

WITH a AS (

SELECT ST_RemoveRepeatedPoints(ST_Segmentize(ST_Letters('a'), 1), 1) AS a

)

SELECT ST_SnapToGrid(a, 5) FROM a;
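Grid snapping itself is little more than rounding. The Python sketch below rounds every coordinate to the nearest multiple of the grid size and drops consecutive vertices that land on the same grid point. The ring coordinates are invented for illustration, and this is only a sketch of the idea, not how PostGIS implements it.

```python
def snap_to_grid(points, size):
    """Round each coordinate to the nearest multiple of size."""
    snapped = []
    for x, y in points:
        p = (round(x / size) * size, round(y / size) * size)
        # Skip a vertex that collapses onto the previous one
        if not snapped or snapped[-1] != p:
            snapped.append(p)
    return snapped

# Hypothetical ring with "noisy" coordinates
ring = [(0.4, 0.2), (7.6, 1.1), (9.9, 4.0), (12.4, 12.6), (1.2, 11.0), (0.4, 0.2)]
snapped_ring = snap_to_grid(ring, 5)
print(snapped_ring)
```

Nothing in the rounding step checks the shape afterwards, which is why snapped rings can end up touching or crossing themselves.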

However, as you can see at the top left, the grid snapper frequently generates invalidity in polygons, such as the self-intersecting ring in this example.

A more modern alternative is precision reduction.

WITH a AS (

SELECT ST_RemoveRepeatedPoints(ST_Segmentize(ST_Letters('a'), 1), 1) AS a

)

SELECT ST_ReducePrecision(a, 5) FROM a;

The ST_ReducePrecision function not only snaps geometries to a fixed precision grid, it also ensures that outputs are always valid.

Because grid snapping tends to introduce a lot of vertices along straight edges, combining it with a line simplifier makes a lot of sense.

WITH a AS (

SELECT ST_RemoveRepeatedPoints(ST_Segmentize(ST_Letters('a'), 1), 1) AS a

)

SELECT ST_Simplify(ST_ReducePrecision(a, 5),1) FROM a;

Simplifying single geometries is all well and good, but what about simplifying groups of geometries? Specifically ones that share boundaries?

Fortunately, since PostGIS 3.6 there is now a complete set of functions for that problem.

Starting with a pair of polygons with a non-matched shared boundary.

Non-clean boundaries can be cleaned up with the ST_CoverageClean function.

SELECT ST_CoverageClean(geom) OVER () AS geom FROM polys;

And once the coverage is clean, the shapes including their shared borders can be simplified with ST_CoverageSimplify.

WITH clean AS (

SELECT ST_CoverageClean(geom) OVER () AS geom FROM polys

)

SELECT ST_CoverageSimplify(geom, 10) OVER () FROM clean;

by Paul Ramsey (Paul.Ramsey@crunchydata.com) at December 09, 2025 01:00 PM

November 21, 2025

Crunchy Data (Snowflake)

PostGIS Performance: Data Sampling

One of the temptations database users face, when presented with a huge table of interesting data, is to run queries that interrogate every record. Got a billion measurements? What’s the average of that?!

One way to find out is to just calculate the average.

SELECT avg(value) FROM mytable;

For a billion records, that could take a while!

Fortunately, the “Law of Large Numbers” is here to bail us out, stating that the average of a sample approaches the average of the population, as the sample size grows. And amazingly, the sample does not even have to be particularly large to be quite close.

Here’s a table of 10M values, randomly generated from a normal distribution. We know the average is zero. What will a 1% sample (roughly 100K values) tell us it is?

CREATE TABLE normal AS

SELECT random_normal(0,1) AS values

FROM generate_series(1,10000000);

We can take a sample using a sort, or using the random() function, but both of those techniques first scan the whole table, which is exactly what we want to avoid.

Instead, we can use the PostgreSQL TABLESAMPLE feature, to get a quick sample of the pages in the table and an estimate of the average.

SELECT avg(values)

FROM normal TABLESAMPLE SYSTEM (1);

I get an answer – 0.0031, very close to the population average – and it takes just 43 milliseconds.
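The statistical claim is easy to try outside the database. The Python sketch below draws one million standard normal values and compares the mean of a 10,000-value sample with the mean of the whole population. Note the assumptions: the sizes are scaled down to keep the run quick, the seed is fixed for repeatability, and this is simple random sampling of individual values, not the page-level sampling that TABLESAMPLE SYSTEM performs.

```python
import random
import statistics

rng = random.Random(42)  # fixed seed so the run is repeatable

# Population: one million draws from a normal distribution (mean 0, stddev 1)
population = [rng.gauss(0, 1) for _ in range(1_000_000)]

# Sample: 1% of the population, chosen without replacement
sample = rng.sample(population, 10_000)

population_mean = statistics.fmean(population)
sample_mean = statistics.fmean(sample)

print(f"population mean: {population_mean:+.4f}")
print(f"sample mean:     {sample_mean:+.4f}")
```

The standard error of the sample mean here is about 0.01, so a 1% sample is expected to land within a few hundredths of the true average.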

Can this work with spatial? For the right data, it can. Imagine you had a table that had one point in it for every person in Canada (36 million of them) and you wanted to find out how many people lived in Toronto (or this red circle around Toronto).

SELECT count(*)

FROM census_people

JOIN yyz

ON ST_Intersects(yyz.geom, census_people.geom);

The answer is 5,010,266, and it takes 7.2 seconds to return. What if we take a 10% sample?

SELECT count(*)

FROM census_people TABLESAMPLE SYSTEM (10)

JOIN yyz

ON ST_Intersects(yyz.geom, census_people.geom);

The sample is 10%, and the answer comes back as 508,292 (near one tenth of our actual measurement) in 2.2 seconds. What about a 1% sample?

SELECT count(*)

FROM census_people TABLESAMPLE SYSTEM (1)

JOIN yyz

ON ST_Intersects(yyz.geom, census_people.geom);

The sample is 1%, and the answer comes back as 50,379 (near one hundredth of our actual measurement) in 0.2 seconds. Still a good estimate!

Is this black magic? No, the TABLESAMPLE SYSTEM mode gets its speed by reading pages randomly. In our last example, it randomly chose 1% of the pages. Here’s what that looks like in Toronto.

See in particular how blotchy the data are in the suburban areas outside the circle. The data in the table are not randomly distributed to the pages, they came from the census data in order, and ended up loaded into the database in order. So for any given database page, the actual rows in the page will tend to be near to one another.

This works for this example because the amount of data is high, and the area we are summarizing is a large proportion of the total data – a seventh of the Canadian population lives in that circle.

If we were summarizing a smaller area, the results would not have been so good.

The TABLESAMPLE SYSTEM mode is a powerful tool, but you have to be sure that any given page has a random selection of the data you are sampling for. Our random normal example worked perfectly, because the data were perfectly random. A sample of time series data would not work well for sampling time windows (the data were probably stored in order of arrival) but might work for sampling some other value.

by Paul Ramsey (Paul.Ramsey@crunchydata.com) at November 21, 2025 01:00 PM

November 14, 2025

Crunchy Data (Snowflake)

PostGIS Performance: Intersection Predicates and Overlays

In this series, we talk about the many different ways you can speed up PostGIS. A common geospatial operation is to clip out a collection of smaller shapes that are contained within a larger shape. Today let's review the most efficient ways to query for things inside something else.

Frequently the smaller shapes are clipped where they cross the boundary, using the ST_Intersection function.

The naive SQL is a simple spatial join on ST_Intersects.

SELECT ST_Intersection(polygon.geom, p.geom) AS geom

FROM parcels p

JOIN polygon

ON ST_Intersects(polygon.geom, p.geom);

When run on the small test area shown in the pictures, the query takes about 14ms. That’s fast, but the problem is small, and larger operations will be slower.

There is a simple way to speed up the query that takes advantage of the fact that boolean spatial predicates are faster than spatial overlay operations.

What?

- “Boolean spatial predicates” are functions like ST_Intersects and ST_Contains. They take in two geometries and return “true” or “false” for whether the geometries pass the named test.

- “Spatial overlay operations” are functions like ST_Intersection or ST_Difference that take in two geometries, and generate a new geometry based on the named rule.

Predicates are faster because their tests often allow for logical short circuits (once you find any two edges that intersect, you know the geometries intersect) and because they can make use of the prepared geometry optimizations to cache and index edges between function calls.

The speed-up for spatial overlay simply observes that, for most overlays there is a large set of features that can be added to the result set unchanged – the features that are fully contained in the clipping shape. We can identify them using ST_Contains.

Similarly, there is a smaller set of features that cross the border, and thus do need to be clipped. These are the features for which ST_Intersects is true but ST_Contains is not.

The higher performance query uses the faster predicates to filter the smaller shapes into two streams, one for intersection, and one for unchanged inclusion.

SELECT

CASE

WHEN ST_Contains(polygon.geom, p.geom) THEN p.geom

ELSE ST_Intersection(polygon.geom, p.geom)

END AS geom

FROM parcels p

JOIN polygon

ON ST_Intersects(polygon.geom, p.geom);

Two predicates are used here: the ST_Intersects in the join clause ensures that only parcels that might participate in the overlay are fed into the CASE statement, where the ST_Contains predicate no-ops the parcels that do not cross the boundary.

When run against our tiny example, the query executes in just 9ms. Amazing that the difference is large enough to measure on such a small example.

Using CASE statement to combine predicates and overlays

The core idea here is to recognize that boolean spatial predicates like ST_Contains and ST_Intersects are computationally much faster than spatial overlay operations like ST_Intersection. The standard, but slow, approach clips all intersecting features. The optimized method uses a CASE statement and ST_Contains check to create a shortcut: if a smaller geometry is entirely contained within the larger clipping polygon, we return the geometry unchanged (a quick no-op) and completely bypass the slower ST_Intersection calculation.

You can apply this optimization pattern to any PostGIS work involving clipping, spatial joins, or overlays where you suspect a significant number of features might be fully contained within a boundary. By filtering and partitioning your geometries into "fully contained" (fast path) and "crossing the border" (slow path) streams, you ensure the expensive overlay operations are only executed when they are strictly necessary to clip the edges.
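The same two-stream pattern can be sketched in Python with axis-aligned rectangles standing in for geometries. Everything here is a simplified assumption: rectangles are tuples of (xmin, ymin, xmax, ymax), the helper names are invented, and for rectangles the overlay is not actually slower than the predicate. The point is only to show the control flow and to count how often the overlay runs.

```python
def intersects(a, b):
    """True when two rectangles share any area or boundary."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]

def contains(outer, inner):
    """True when inner lies entirely within outer."""
    return (outer[0] <= inner[0] and outer[1] <= inner[1]
            and inner[2] <= outer[2] and inner[3] <= outer[3])

def intersection(a, b):
    """Overlay: the rectangle common to a and b (assumes they intersect)."""
    return (max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3]))

def clip_all(clipper, shapes):
    """Clip shapes to clipper, using the overlay only where it is needed."""
    clipped, overlay_calls = [], 0
    for shape in shapes:
        if not intersects(clipper, shape):
            continue                      # join filter: not a candidate at all
        if contains(clipper, shape):
            clipped.append(shape)         # fast path: returned unchanged
        else:
            clipped.append(intersection(clipper, shape))  # slow path
            overlay_calls += 1
    return clipped, overlay_calls

clipper = (0, 0, 10, 10)
parcels = [(1, 1, 2, 2), (8, 8, 12, 12), (20, 20, 21, 21), (4, 4, 6, 6)]
result, overlay_calls = clip_all(clipper, parcels)
print(result, overlay_calls)
```

Of the three parcels that pass the join filter, only the one straddling the boundary reaches the overlay step.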

Need more PostGIS?

Join us this year on November 20 for PostGIS Day 2025, a free, virtual, community event about open source geospatial!

by Paul Ramsey (Paul.Ramsey@crunchydata.com) at November 14, 2025 01:00 PM

November 12, 2025

PostGIS Development

PostGIS 3.6.1

The PostGIS Team is pleased to publish PostGIS 3.6.1. This is a bug fix release that includes bug fixes since PostGIS 3.6.0.

- This version requires PostgreSQL 12 - 18, Proj 6.1+, and GEOS 3.8+. To take advantage of all features, GEOS 3.12+ is needed.

- SFCGAL 1.4+ is needed to enable postgis_sfcgal support. To take advantage of all SFCGAL features, SFCGAL 2.2+ is needed.

November 06, 2025

Crunchy Data (Snowflake)

PostGIS Performance: Improve Bounding Boxes with Decompose and Subdivide

In the third installment of the PostGIS Performance series, I wanted to talk about performance around bounding boxes.

Geometry data is different from most column types you find in a relational database. The objects in a geometry column can be wildly different in the amount of the data domain they cover, and the amount of physical size they take up on disk.

The data in the “admin0” Natural Earth data range from the 1.2 hectare Vatican City, to the 1.6 billion hectare Russia, and from the 4 point polygon defining Serranilla Bank to the 68 thousand points of polygons defining Canada.

SELECT ST_NPoints(geom) AS npoints, name

FROM admin0

ORDER BY 1 DESC LIMIT 5;

SELECT ST_Area(geom::geography) AS area, name

FROM admin0

ORDER BY 1 DESC LIMIT 5;

As you can imagine, polygons this different will have different performance characteristics:

- Physically large objects will take longer to work with: to pull off the disk, to scan, and to calculate with.

- Geographically large objects will cover more other objects, and reduce the effectiveness of your indexes.

Your spatial indexes are “r-tree” indexes, where each object is represented by a bounding box.

The bounding boxes can overlap, and it is possible for some boxes to cover a lot of the dataset.

For example, here is the bounding box of France.

What?! How is that France? Well, France is more than just the European parts, it also includes the island of Réunion, in the southern Indian Ocean, and the island of Guadeloupe, in the Caribbean. Taken together they result in this very large bounding box.

Such a large box makes a poor addition to the spatial index of all the objects in “admin0”. I could be searching with a query key in the middle of the Atlantic, and the index would still be telling me “maybe it is in France?”.

For this testing, I have made a synthetic dataset of one million random points covering the whole world.

CREATE TABLE random_normal AS

SELECT id,

ST_Point(

random_normal(0, 180),

random_normal(0, 80),

4326) AS geom

FROM generate_series(1, 1000000) AS id;

CREATE INDEX random_normal_geom_x ON random_normal USING GIST (geom);

The un-altered bounds of “admin0”, the bounds that will be used to run the spatial join, look like this. Lots of overlap, lots of places where the bounds cover areas the polygons do not.

The baseline time to do a spatial join using the un-altered “admin0” data is 9 seconds.

SELECT Count(*), admin0.name

FROM admin0 JOIN random_normal

ON ST_Intersects(random_normal.geom, admin0.geom)

GROUP BY admin0.name;

What if, instead of joining against the raw “admin0” – which includes weird cases like France and a Canada with hundreds of islands – we first decompose every object into the singular polygons that make it up, using ST_Dump?

The decomposed objects cover far less ocean, and much more accurately represent the polygons they are proxying for. And the time – including the cost of decomposing the objects – to do a full join on the 1M points falls to 3.8 seconds.

WITH polys AS (

SELECT (ST_Dump(geom)).geom AS geom, name

FROM admin0

)

SELECT Count(*), polys.name

FROM polys JOIN random_normal

ON ST_Intersects(random_normal.geom, polys.geom)

GROUP BY polys.name;
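A quick way to see why decomposition helps is to compare the area of one bounding box around a multi-part shape with the total area of one box per part. The Python sketch below does that for a hypothetical three-part shape; the coordinates are invented and only loosely echo the France example.

```python
def bbox(points):
    """Bounding box (xmin, ymin, xmax, ymax) of a list of (x, y) points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))

def bbox_area(box):
    xmin, ymin, xmax, ymax = box
    return (xmax - xmin) * (ymax - ymin)

# Hypothetical multi-part shape: a mainland plus two distant islands
parts = [
    [(-5, 42), (8, 42), (8, 51), (-5, 51)],        # mainland
    [(55, -22), (56, -22), (56, -21), (55, -21)],  # island in the Indian Ocean
    [(-62, 16), (-61, 16), (-61, 17), (-62, 17)],  # island in the Caribbean
]

# One box around everything versus one box per part
whole_box = bbox([p for part in parts for p in part])
part_boxes = [bbox(part) for part in parts]

whole_area = bbox_area(whole_box)
parts_area = sum(bbox_area(b) for b in part_boxes)
print(whole_box, whole_area, parts_area)
```

In this made-up case the single box covers more than seventy times the area of the three per-part boxes, and almost all of that extra area is empty ocean that the index still has to report as a candidate.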

There is still a lot of ocean being queried here, and also some of the polygons are not just very spatially large, but include a lot of vertices. What if we make the polygons smaller yet by chopping them up with ST_Subdivide?

These bounds are almost perfect: they cover very little of the ocean, and they also have reduced the maximum size of any polygon to no more than 128 vertices. And the performance, even including the very expensive subdivision step, gets faster yet.

WITH polys AS (

SELECT ST_Subdivide(geom,128) AS geom, name FROM admin0

)

SELECT Count(*), polys.name

FROM polys JOIN random_normal

ON ST_Intersects(random_normal.geom, polys.geom)

GROUP BY polys.name;

The final query takes just 1.8 seconds, twice as fast as the simple boxes, and 4 times faster than a naive spatial join. For smaller collections of points, the naive approach can work as fast as the subdivision, but for this 1M point test set the overhead of doing the subdivision is still far less than the gains from using the more effective bounds.

Investing computation into creating better, smaller, and simpler geometries pays off significantly for large datasets by making the spatial index much more effective.

by Paul Ramsey (Paul.Ramsey@crunchydata.com) at November 06, 2025 01:00 PM

October 27, 2025

Auchindown

Trigger Happy: Live edits in QGIS

October 20, 2025

Crunchy Data (Snowflake)

PostGIS Performance: pg_stat_statements and Postgres tuning

In this series, we talk about the many different ways you can speed up PostGIS. Today let’s talk about looking across the queries with pg_stat_statements and some basic tuning.

Showing Postgres query times with pg_stat_statements

A reasonable question to ask, if you are managing a system with variable performance is: “what queries on my system are running slowly?”

Fortunately, PostgreSQL includes an extension called “pg_stat_statements” that tracks query performance over time and maintains a list of high cost queries.

CREATE EXTENSION pg_stat_statements;

The module also has to be listed in “shared_preload_libraries”, which requires a server restart, before it collects anything. Then you will have to leave your database running for a while, so the extension can gather up data about the kind of queries that are run on your database.

Once it has been running for a while, you have a whole view – pg_stat_statements – that collects your query statistics. You can query it directly with SELECT * or you can write individual queries to find the slowest queries, the longest running ones, and so on.

Here is an example that lists the 10 longest running queries, ranked by mean execution time.

SELECT

total_exec_time,

mean_exec_time,

calls,

rows,

query

FROM pg_stat_statements

WHERE calls > 0

ORDER BY mean_exec_time DESC

LIMIT 10;

“pg_stat_statements” is good at finding individual queries to tune, and the most frequent cause of slow queries is just inefficient SQL or a need for indexing (see the first post in the series).

Occasionally performance issues do crop up at the system level. The most frequent culprit is memory pressure. PostgreSQL ships with conservative default settings for memory usage, and some workloads benefit from more memory.

Shared buffers

A database server looks like an infinite, accessible, reliable bucket of data. In fact, the server orchestrates data between the disk – which is permanent and slow – and the random access memory – which is volatile and fast – in order to provide the illusion of such a system.

When the balance between slow storage and fast memory is out of whack, system performance falls. When the data being read are present in the fast memory, that is a “cache hit”. When they are not, the read continues on to the slow disk, which is a “cache miss”.

You can check the balance of your system by looking at the “cache hit ratio”.

SELECT

sum(heap_blks_read) as heap_read,

sum(heap_blks_hit) as heap_hit,

sum(heap_blks_hit) / (sum(heap_blks_hit) + sum(heap_blks_read)) as ratio

FROM

pg_statio_user_tables;

A ratio around 0.99 (99%) is a good sign. Below 0.90 (90%) means that your database could be memory constrained, so increasing the “shared_buffers” parameter may help. As a general rule, “shared buffers” should be about 25% of physical RAM.
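The arithmetic behind the ratio is simple enough to spell out. This Python sketch uses invented block counts, and unlike the SQL above it returns nothing, rather than dividing by zero, when no blocks have been touched yet.

```python
def cache_hit_ratio(blocks_hit, blocks_read):
    """Share of block requests served from memory rather than from disk."""
    total = blocks_hit + blocks_read
    if total == 0:
        return None  # no activity recorded yet, so no meaningful ratio
    return blocks_hit / total

# Hypothetical counters: 990,000 hits against 10,000 reads from disk
ratio = cache_hit_ratio(blocks_hit=990_000, blocks_read=10_000)
print(f"{ratio:.2%}")
```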

Working memory

Working memory is controlled by the “work_mem” parameter, which sets how much memory is available to each in-memory sort, hash table, and similar short term operation inside a query. Unlike the “shared buffers”, which are permanent and fully allocated on startup, the “working memory” is allocated on an as-needed basis.

However, the working memory limit is applied to each such operation in each database connection, so it is possible for the total working memory to radically exceed the “work_mem” value. If 1000 connections each allocate 100MB, your server will probably run out of memory.

You can speed up known memory-hungry queries, like large sorts and aggregations, by temporarily increasing the working memory available to your particular connection, then reducing it when the query is complete.

SET work_mem = '2GB';

-- run the memory-hungry query here

SET work_mem = '100MB';

Maintenance tasks, like building indexes and the “VACUUM” command, draw on a separate limit, “maintenance_work_mem”. You can speed up building a spatial index, or the maintenance of a large table, by increasing it temporarily.

SET maintenance_work_mem = '2GB';

CREATE INDEX roads_geom_x ON roads USING GIST (geom);

VACUUM roads;

SET maintenance_work_mem = '128MB';

Parallelism

It is common for modern database servers to have multiple CPU cores available, but your PostgreSQL configuration may not be tuned to use them all. Postgres does have parallel query support. PostgreSQL is conservative about making use of multiple cores, because executing and coordinating multi-process queries has overheads, but in general large aggregations or scans can frequently make effective use of two to four cores at once.

Check what limits are set on your database.

SHOW max_worker_processes;

SHOW max_parallel_workers;

Setting the maximums to the number of cores on your server is good practice. In particular, don’t be afraid to reduce the number of workers if you have fewer cores – there is no benefit to be had in workers contending for cores.

Tuning Postgres basics

To wrap up:

- Check the slowest queries with pg_stat_statements.

- Use EXPLAIN and Indexing to experiment with improvements

- Check for inefficient use of system resources by looking at:

  - shared buffers

  - working memory (work_mem)

  - parallelism

After you do some tuning, don’t forget to reset pg_stat_statements and check again to see if/how things have improved!

by Paul Ramsey (Paul.Ramsey@crunchydata.com) at October 20, 2025 01:00 PM

October 16, 2025

PostGIS Development

PostGIS 3.5.4

The PostGIS Team is pleased to release PostGIS 3.5.4.

This version requires PostgreSQL 12 - 18beta1, GEOS 3.8 or higher, and Proj 6.1+. To take advantage of all features, GEOS 3.12+ is needed. SFCGAL 1.4+ is needed to enable postgis_sfcgal support. To take advantage of all SFCGAL features, SFCGAL 1.5+ is needed.

- source download md5

- NEWS

- PDF docs: en

This release is a bug fix release that includes bug fixes since PostGIS 3.5.3.

October 10, 2025

Crunchy Data (Snowflake)

PostGIS Performance: Indexing and EXPLAIN

I am kicking off a short blog series on PostGIS performance fundamentals. For this first example, we will cover fundamental indexing.

We will explore performance using the Natural Earth “admin0” (countries) data (258 polygons) and their “populated places” (7342 points).

A classic spatial query is the “spatial join”, finding the relationships between objects using a spatial condition.

“How many populated places are there within each country?”

SELECT Count(*), a.name

FROM admin0 a

JOIN popplaces p

ON ST_Intersects(a.geom, p.geom)

GROUP BY a.name ORDER BY 1 DESC;

This returns an answer, but it takes 2200 milliseconds! For two such small tables, that seems like a long time. Why?

The first stop in any performance evaluation should be the “EXPLAIN” command, which returns a detailed explanation of how the query is executed by the database.

EXPLAIN SELECT Count(*), a.name

FROM admin0 a

JOIN popplaces p

ON ST_Intersects(a.geom, p.geom)

GROUP BY a.name;

Explain output looks complicated, but a good practice is to start from the middle (the most deeply nested) and work your way out.

QUERY PLAN

-------------------------------------------------------------------------

GroupAggregate (cost=23702129.78..23702145.38 rows=258 width=18)

Group Key: a.name

-> Sort (cost=23702129.78..23702134.12 rows=1737 width=10)

Sort Key: a.name

-> Nested Loop (cost=0.00..23702036.30 rows=1737 width=10)

Join Filter: st_intersects(a.geom, p.geom)

-> Seq Scan on admin0 a (cost=0.00..98.58 rows=258 width=34320)

-> Materialize (cost=0.00..328.13 rows=7342 width=32)

-> Seq Scan on popplaces p (cost=0.00..291.42 rows=7342 width=32)

The query plan includes an estimated start-up cost and total cost for each step in the plan. Steps with very large total costs are potential bottlenecks. Our bottleneck is in the “nested loop” join, which is performing the spatial join.

- For each geometry in the admin0 table:

  - Check every geometry in the popplaces table

    - If it passes the join filter, keep it in the join

This pattern of checking every potential intersection is a lot of work, even for our small tables. For 258 countries and 7342 places, it runs about 1.9 million intersection tests (258 × 7342 = 1,894,236)!

Just as for a non-spatial join, the key to making this query efficient is adding an index. In this case, an index on the populated places geometry.

CREATE INDEX popplaces_geom_x ON popplaces USING GIST (geom);

The PostGIS spatial index is implemented as an “r-tree” using the GIST “access method”. The “r-tree” index algorithm is auto-tuning, so you do not need to fiddle with parameters to get the best index for your data.

Important! Do not forget to specify the GIST access method with the USING GIST keywords in your index creation. If you leave them out, you will build a default PostgreSQL b-tree index instead, and that will provide no speed-up at all for your spatial join.

Running with the index in place, the SQL is exactly the same.

SELECT Count(*), a.name

FROM admin0 a

JOIN popplaces p

ON ST_Intersects(a.geom, p.geom)

GROUP BY a.name;

But now it takes 200 milliseconds, 10 times faster! Why? The SQL has not changed, but thanks to the index, the query plan has changed.

QUERY PLAN

-----------------------------------------------------------------------------

HashAggregate (cost=4185.41..4187.99 rows=258 width=18)

Group Key: a.name

-> Nested Loop (cost=0.15..4176.73 rows=1737 width=10)

-> Seq Scan on admin0 a (cost=0.00..98.58 rows=258 width=34320)

-> Index Scan using popplaces_geom_x on popplaces p (cost=0.15..15.80 rows=1 width=32)

Index Cond: (geom && a.geom)

Filter: st_intersects(a.geom, geom)

The join is still a nested loop on the admin0 geometry, but instead of a sequential scan on the populated places, costing as much as 300, the inner loop is an index scan, costing only 15. As much as 20 times cheaper, resulting in our overall 10 times faster query time.
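The index condition and the filter in that plan form a two-step test: a cheap bounding box check first, then the exact geometry test only for the survivors. The Python sketch below mimics this with made-up polygons and points and counts how many exact tests each approach runs. It is a simplification: a real r-tree also avoids visiting the non-candidate rows at all, whereas this loop still looks at every point.

```python
def bbox(ring):
    xs = [x for x, _ in ring]
    ys = [y for _, y in ring]
    return (min(xs), min(ys), max(xs), max(ys))

def in_bbox(pt, box):
    return box[0] <= pt[0] <= box[2] and box[1] <= pt[1] <= box[3]

def point_in_polygon(pt, ring):
    """Exact test by ray casting; ring is a list of vertices, not closed."""
    x, y = pt
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def count_places(polygons, points, use_bbox_filter):
    """Count points per polygon and the number of exact tests performed."""
    counts, exact_tests = {}, 0
    for name, ring in polygons.items():
        box = bbox(ring)
        counts[name] = 0
        for pt in points:
            if use_bbox_filter and not in_bbox(pt, box):
                continue  # rejected cheaply, like the index condition
            exact_tests += 1
            if point_in_polygon(pt, ring):
                counts[name] += 1
    return counts, exact_tests

# Hypothetical data: a triangle, a square, and six points
polygons = {
    "A": [(0, 0), (4, 0), (0, 4)],
    "B": [(10, 10), (14, 10), (14, 14), (10, 14)],
}
points = [(1, 1), (3, 3), (11, 11), (13, 12), (20, 20), (-5, 2)]

naive_counts, naive_tests = count_places(polygons, points, use_bbox_filter=False)
fast_counts, fast_tests = count_places(polygons, points, use_bbox_filter=True)
print(naive_counts, naive_tests, fast_tests)
```

Both runs give the same counts, but the filtered run performs a third as many exact tests on this tiny dataset.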

by Paul Ramsey (Paul.Ramsey@crunchydata.com) at October 10, 2025 02:00 PM

September 02, 2025

PostGIS Development

PostGIS 3.6.0

The PostGIS Team is pleased to release PostGIS 3.6.0! Best Served with PostgreSQL 18 Beta3 and recently released GEOS 3.14.0.

This version requires PostgreSQL 12 - 18beta3, GEOS 3.8 or higher, and Proj 6.1+. To take advantage of all features, GEOS 3.14+ is needed. To take advantage of all SFCGAL features, SFCGAL 2.2.0+ is needed.

This release includes bug fixes since PostGIS 3.5.3 and new features.

August 25, 2025

PostGIS Development

PostGIS 3.6.0rc2

The PostGIS Team is pleased to release PostGIS 3.6.0rc2! Best Served with PostgreSQL 18 Beta3 and recently released GEOS 3.14.0.

This version requires PostgreSQL 12 - 18beta3, GEOS 3.8 or higher, and Proj 6.1+. To take advantage of all features, GEOS 3.14+ is needed. To take advantage of all SFCGAL features, SFCGAL 2.2.0+ is needed.

This release is a release candidate of a major release. It includes bug fixes since PostGIS 3.5.3 and new features.

August 18, 2025

PostGIS Development

PostGIS 3.6.0rc1

The PostGIS Team is pleased to release PostGIS 3.6.0rc1! Best Served with PostgreSQL 18 Beta3 and soon to be released GEOS 3.14.

This version requires PostgreSQL 12 - 18beta3, GEOS 3.8 or higher, and Proj 6.1+. To take advantage of all features, GEOS 3.14+ is needed. To take advantage of all SFCGAL features, SFCGAL 2.2.0+ is needed.

This release is a release candidate of a major release. It includes bug fixes since PostGIS 3.5.3 and new features.

August 01, 2025

Auchindown

The Beauty of Extensibility

July 20, 2025

PostGIS Development

PostGIS 3.6.0beta1

The PostGIS Team is pleased to release PostGIS 3.6.0beta1! Best Served with PostgreSQL 18 Beta2 and soon to be released GEOS 3.14.

This version requires PostgreSQL 12 - 18beta2, GEOS 3.8 or higher, and Proj 6.1+. To take advantage of all features, GEOS 3.14+ is needed. To take advantage of all SFCGAL features, SFCGAL 2.2.0+ is needed.

This release is a beta of a major release, it includes bug fixes since PostGIS 3.5.3 and new features.

May 18, 2025

PostGIS Development

PostGIS 3.6.0alpha1

The PostGIS Team is pleased to release PostGIS 3.6.0alpha1! Best Served with PostgreSQL 18 Beta1 and GEOS 3.13.1.

This version requires PostgreSQL 12 - 18beta1, GEOS 3.8 or higher, and Proj 6.1+. To take advantage of all features, GEOS 3.12+ is needed. To take advantage of all SFCGAL features, SFCGAL 2.1.0+ is needed.

This release is an alpha of a major release, it includes bug fixes since PostGIS 3.5.3 and new features.

May 17, 2025

PostGIS Development

PostGIS 3.5.3

The PostGIS Team is pleased to release PostGIS 3.5.3.

This version requires PostgreSQL 12 - 18beta1, GEOS 3.8 or higher, and Proj 6.1+. To take advantage of all features, GEOS 3.12+ is needed. SFCGAL 1.4+ is needed to enable postgis_sfcgal support. To take advantage of all SFCGAL features, SFCGAL 1.5+ is needed.

- PDF docs: en

This release is a bug fix release that includes bug fixes since PostGIS 3.5.1.

March 14, 2025

Crunchy Data (Snowflake)

Pi Day PostGIS Circles

What's your favourite infinite sequence of non-repeating digits? There are some people who make a case for e, but to my mind nothing beats the transcendental and curvy utility of π, the ratio of a circle's circumference to its diameter.

Drawing circles is a simple thing to do in PostGIS -- take a point, and buffer it. The result is circular, and we can calculate an estimate of pi just by measuring the perimeter of the unit circle.

SELECT ST_Buffer('POINT(0 0)', 1.0);

Except, look a little more closely -- this "circle" seems to be made up of short straight lines. What is the ratio of its circumference to its diameter?

SELECT ST_Perimeter(ST_Buffer('POINT(0 0)', 1.0)) / 2;

3.1365484905459406

That's close to pi, but it's not pi. Can we generate a better approximation? What if we make the edges even shorter? The third parameter to ST_Buffer() is the "quadsegs", the number of segments to build each quadrant of the circle.

SELECT ST_Perimeter(ST_Buffer('POINT(0 0)', 1.0, quadsegs => 128)) / 2;

3.1415729403671087

Much closer!
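Both numbers can be reproduced without a database. This is a rough sketch, assuming the buffer output is a regular polygon with 4 × quadsegs edges whose vertices lie on the circle, and that the default quadsegs is 8. Under that model, half the perimeter is n·sin(π/n). The function name is made up for illustration and is not part of PostGIS.

```python
import math


def half_perimeter_of_buffer(quadsegs: int = 8, radius: float = 1.0) -> float:
    """Half the perimeter of a regular polygon inscribed in a circle.

    Assumes 4 * quadsegs equal edges with vertices on the circle.
    This models the buffer output; it is not PostGIS code.
    """
    n = 4 * quadsegs
    edge = 2.0 * radius * math.sin(math.pi / n)  # chord length of one edge
    return n * edge / 2.0


if __name__ == "__main__":
    for q in (8, 128, 1024):
        print(q, half_perimeter_of_buffer(q))
```

Every extra edge pulls the estimate closer to pi, but an inscribed polygon always falls a little short of it.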

We can crank this process up a lot more, keep adding edges, but at some point the process becomes silly. We should just be able to say "this edge is a portion of a circle, not a straight line", and get an actual circular arc.

Good news, we can do exactly that! The CIRCULARSTRING is the curvy analogue to a LINESTRING wherein every connection is between three points that define a portion of a circle.

The circular arc above is the arc that starts at A and ends at C, passing through B. Any three points define a unique circular arc. A CIRCULARSTRING is a connected sequence of these arcs, just as a LINESTRING is a connected sequence of linear edges.

How does this help us get to pi though? Well, PostGIS has a moderate amount of support for circular arc geometry, so if we construct a circle using "natively curved" objects, we should get an exact representation of a circle rather than an approximation.

So, what is an arc that starts and ends at the same point? A circle! This is the unit circle -- a circle of radius one centered on the origin -- expressed as a CIRCULARSTRING.

SELECT ST_Length('CIRCULARSTRING(1 0, -1 0, 1 0)') / 2;

3.141592653589793

That looks a lot like pi!

Let's bust out the built-in pi() function from PostgreSQL and check to be sure.

SELECT pi() - ST_Length('CIRCULARSTRING(1 0, -1 0, 1 0)') / 2;

0

Yep, a perfect π to celebrate "Pi Day" with!

by Paul Ramsey (Paul.Ramsey@crunchydata.com) at March 14, 2025 02:00 PM

February 10, 2025

Paul Ramsey

The Early History of Spatial Databases and PostGIS

For PostGIS Day this year I researched a little into one of my favourite topics, the history of relational databases. I feel like in general we do not pay a lot of attention to history in software development. To quote Yoda, “All his life has he looked away… to the future, to the horizon. Never his mind on where he was. Hmm? What he was doing.”

Anyways, this year I took on the topic of the early history of spatial databases in particular. There was a lot going on in the ’90s in the field, and in many ways PostGIS was a late entrant, even though it gobbled up a lot of the user base eventually.

February 07, 2025

Crunchy Data (Snowflake)

Using Cloud Rasters with PostGIS

With the postgis_raster extension, it is possible to access gigabytes of raster data from the cloud, without ever downloading the data.

How? The venerable postgis_raster extension (released 13 years ago) already has the critical core support built-in!

Rasters can be stored inside the database, or outside the database, on a local file system or anywhere it can be accessed by the underlying GDAL raster support library. The storage options include S3, Azure, Google, Alibaba, and any HTTP server that supports RANGE requests.

As long as the rasters are in the cloud optimized GeoTIFF (aka "COG") format, the network access to the data will be optimized and provide access performance limited mostly by the speed of connection between your database server and the cloud storage.

TL;DR It Works

Prepare the Database

Set up a database named raster with the postgis and postgis_raster extensions.

CREATE EXTENSION postgis;

CREATE EXTENSION postgis_raster;

ALTER DATABASE raster

SET postgis.gdal_enabled_drivers TO 'GTiff';

ALTER DATABASE raster

SET postgis.enable_outdb_rasters TO true;

Investigate The Data

COG is still a new format for public agencies, so finding a large public example can be tricky. Here is a 56GB COG of medium resolution (30m) elevation data for Canada. Don't try and download it, it's 56GB!

You can see some metadata about the file using the gdalinfo utility to read the headers.

gdalinfo /vsicurl/https://datacube-prod-data-public.s3.amazonaws.com/store/elevation/mrdem/mrdem-30/mrdem-30-dsm.tif

Note that we prefix the URL to the image with /vsicurl/ to tell GDAL to use virtual file system access rather than direct download.

There is a lot of metadata!

Driver: GTiff/GeoTIFF

Files: /vsicurl/https://datacube-prod-data-public.s3.amazonaws.com/store/elevation/mrdem/mrdem-30/mrdem-30-dsm.tif

Size is 183687, 159655

Coordinate System is:

PROJCRS["NAD83(CSRS) / Canada Atlas Lambert",

BASEGEOGCRS["NAD83(CSRS)",

DATUM["NAD83 Canadian Spatial Reference System",

ELLIPSOID["GRS 1980",6378137,298.257222101,

LENGTHUNIT["metre",1]]],

PRIMEM["Greenwich",0,

ANGLEUNIT["degree",0.0174532925199433]],

ID["EPSG",4617]],

CONVERSION["Canada Atlas Lambert",

METHOD["Lambert Conic Conformal (2SP)",

ID["EPSG",9802]],

PARAMETER["Latitude of false origin",49,

ANGLEUNIT["degree",0.0174532925199433],

ID["EPSG",8821]],

PARAMETER["Longitude of false origin",-95,

ANGLEUNIT["degree",0.0174532925199433],

ID["EPSG",8822]],

PARAMETER["Latitude of 1st standard parallel",49,

ANGLEUNIT["degree",0.0174532925199433],

ID["EPSG",8823]],

PARAMETER["Latitude of 2nd standard parallel",77,

ANGLEUNIT["degree",0.0174532925199433],

ID["EPSG",8824]],

PARAMETER["Easting at false origin",0,

LENGTHUNIT["metre",1],

ID["EPSG",8826]],

PARAMETER["Northing at false origin",0,

LENGTHUNIT["metre",1],

ID["EPSG",8827]]],

CS[Cartesian,2],

AXIS["(E)",east,

ORDER[1],

LENGTHUNIT["metre",1]],

AXIS["(N)",north,

ORDER[2],

LENGTHUNIT["metre",1]],

USAGE[

SCOPE["Transformation of coordinates at 5m level of accuracy."],

AREA["Canada - onshore and offshore - Alberta; British Columbia; Manitoba; New Brunswick; Newfoundland and Labrador; Northwest Territories; Nova Scotia; Nunavut; Ontario; Prince Edward Island; Quebec; Saskatchewan; Yukon."],

BBOX[38.21,-141.01,86.46,-40.73]],

ID["EPSG",3979]]

Data axis to CRS axis mapping: 1,2

Origin = (-2454000.000000000000000,3887400.000000000000000)

Pixel Size = (30.000000000000000,-30.000000000000000)

Metadata:

TIFFTAG_DATETIME=2024:05:08 12:00:00

AREA_OR_POINT=Area

Image Structure Metadata:

LAYOUT=COG

COMPRESSION=LZW

INTERLEAVE=BAND

Corner Coordinates:

Upper Left (-2454000.000, 3887400.000) (175d38'57.51"W, 68d 7'32.00"N)

Lower Left (-2454000.000, -902250.000) (121d27'11.17"W, 36d35'36.71"N)

Upper Right ( 3056610.000, 3887400.000) ( 10d43'16.37"W, 62d45'36.29"N)

Lower Right ( 3056610.000, -902250.000) ( 63d 0'39.68"W, 34d21' 6.31"N)

Center ( 301305.000, 1492575.000) ( 88d57'23.39"W, 62d31'56.78"N)

Band 1 Block=512x512 Type=Float32, ColorInterp=Gray

NoData Value=-32767

Overviews: 91843x79827, 45921x39913, 22960x19956, 11480x9978, 5740x4989, 2870x2494, 1435x1247, 717x623, 358x311

The key things we need to take from the metadata are that:

- the spatial reference system is "NAD83(CSRS) / Canada Atlas Lambert", "EPSG:3979"; and,

- the blocking (tiling) is 512x512 pixels.

Load The Database Table

With this metadata in hand, we are ready to load a reference to the remote data into our database, using the raster2pgsql utility that comes with PostGIS.

./raster2pgsql \

-R \

-k \

-s 3979 \

-t 512x512 \

-Y 1000 \

/vsicurl/https://datacube-prod-data-public.s3.amazonaws.com/store/elevation/mrdem/mrdem-30/mrdem-30-dsm.tif \

mrdem30 \

| psql raster

That is a lot of flags! What do they mean?

- -R means store references, so the pixel data is not copied into the database.

- -k means do not skip tiles that are all NODATA values. While it would be nice to skip NODATA tiles, doing so involves reading all the pixel data, which is exactly what we are trying to avoid.

- -s 3979 means that the projection of our data is EPSG:3979, the value we got from the metadata.

- -t 512x512 means to create tiles with 512x512 pixels, so that the blocking of the tiles in our database matches the blocking of the remote file. This should help lower the number of network reads any given data request requires.

- -Y 1000 means to use COPY mode when writing out the tile definitions, and to write out batches of 1000 rows in each COPY block.

- Then the URL to the cloud GeoTIFF we are referencing, with /vsicurl/ at the front to indicate using the "curl virtual file system".

- Then the table name (mrdem30) we want to use in the database.

- Finally we pipe the result of the command (which is just SQL text) to psql to load it into the raster database.

When we are done, we have a table of raster tiles that looks like this in the database.

Table "public.mrdem30"

 Column |  Type   | Nullable |               Default

--------+---------+----------+--------------------------------------

 rid    | integer | not null | nextval('mrdem30_rid_seq'::regclass)

 rast   | raster  |          |

Indexes:

"mrdem30_pkey" PRIMARY KEY, btree (rid)

We should add a geometry index on the raster column, specifically on the bounds of each tile.

CREATE INDEX mrdem30_st_convexhull_idx

ON mrdem30 USING GIST (ST_ConvexHull(rast));

This index will speed up the raster tile lookup needed when we are spatially querying.

Query The Data

The single MrDEM GeoTIFF data set is now represented in the database as a table of raster tiles.

SELECT count(*) FROM mrdem30;

There are 112008 tiles in the collection.

Each tile is pretty big, spatially (512 pixels on a side, 30 meters per pixel, means a 15km tile).
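The tile count and tile size both follow from the gdalinfo numbers. A small sketch of the arithmetic, using only values copied from the metadata above (the helper name is illustrative):

```python
import math

# Values copied from the gdalinfo output above.
RASTER_WIDTH_PX = 183687
RASTER_HEIGHT_PX = 159655
PIXEL_SIZE_M = 30
TILE_PX = 512


def tile_grid(width_px: int, height_px: int, tile_px: int) -> tuple:
    """Number of tile columns and rows needed to cover the raster."""
    return math.ceil(width_px / tile_px), math.ceil(height_px / tile_px)


cols, rows = tile_grid(RASTER_WIDTH_PX, RASTER_HEIGHT_PX, TILE_PX)
tile_side_m = TILE_PX * PIXEL_SIZE_M

print(cols, rows, cols * rows)  # 359 312 112008
print(tile_side_m)              # 15360
```

So 359 columns by 312 rows gives the 112008 tiles counted above, and each tile is 15360 meters on a side.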

Each tile knows what file it references, where it is on the globe and what projection it is in.

SELECT (ST_BandMetadata(rast)).*

FROM mrdem30 OFFSET 50000 LIMIT 1;

pixeltype | 32BF

nodatavalue | -32767

isoutdb | t

path | /vsicurl/https://datacube-prod-data-public.s3.amazonaws.com/store/elevation/mrdem/mrdem-30/mrdem-30-dsm.tif

outdbbandnum | 1

filesize | 59659542216

filetimestamp | 1718629812

The ST_ConvexHull() function can be used to get a polygon geometry of the raster bounds.

SELECT ST_AsText(ST_ConvexHull(rast))

FROM mrdem30 OFFSET 50000 LIMIT 1;

POLYGON((-2054640 -367320,-2039280 -367320,-2039280 -382680,-2054640 -382680,-2054640 -367320))

Just like geometries, raster tiles have a spatial reference id associated with them, in this case a projection that makes sense for a Canada-wide raster.

SELECT ST_SRID(rast)

FROM mrdem30 OFFSET 50000 LIMIT 1;

3979

Query Elevation

So how do we get an elevation value from this collection of reference tiles? Easy! For any given point, we pull the tile that point falls inside, and then read off the elevation at that point.

-- Make point for Toronto

-- Transform to raster coordinate system

WITH pt AS (

SELECT ST_Transform(

ST_Point(-79.3832, 43.6532, 4326),

3979) AS toronto

)

-- Find the raster tile of interest,

-- and read the value of band one (there is only one band)

-- at that point.

SELECT

ST_Value(rast, 1, toronto, resample => 'bilinear') AS elevation,

toronto AS geom

FROM

mrdem30, pt

WHERE ST_Intersects(ST_ConvexHull(rast), toronto);

Note that we are using "bilinear interpolation" in ST_Value(), so if our point falls between pixel values, the value we get is interpolated in between the pixel values.
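For readers who have not met bilinear interpolation before, here is a minimal sketch of the idea. It is a textbook formulation with made-up pixel values, not the code PostGIS runs internally.

```python
def bilinear(q00, q10, q01, q11, fx, fy):
    """Interpolate between four neighbouring pixel values.

    q00 and q10 are the left and right values in one pixel row,
    q01 and q11 the left and right values in the next row.
    fx and fy (both in 0..1) give the fractional position of the
    query point between those pixel centres.
    """
    first_row = q00 * (1 - fx) + q10 * fx
    second_row = q01 * (1 - fx) + q11 * fx
    return first_row * (1 - fy) + second_row * fy


if __name__ == "__main__":
    # A point exactly in the middle of four pixels gets their average.
    print(bilinear(100.0, 110.0, 120.0, 130.0, 0.5, 0.5))
```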

Query a Larger Geometry

What about something bigger? How about the flight line of a plane going from Victoria (YYJ) to Calgary (YYC) over the Rocky Mountains?

- Generate the points

- Make a flight route to join them

- Transform that route into the coordinate system of the raster

- Pull all the rasters that touch the line and merge them into one giant raster in memory

- Copy the values off the raster into the Z coordinate of the line

- Dump the line into points to make a pretty picture

-- Create start and end points of route

-- YYJ = Victoria, YYC = Calgary

CREATE TABLE flight AS

WITH

end_pts AS (

SELECT ST_Point(-123.3656, 48.4284, 4326) AS yyj,

ST_Point(-114.0719, 51.0447, 4326) AS yyc

),

-- Construct line and add vertex every 10KM along great circle

-- Reproject to coordinate system of rasters

ln AS (

SELECT ST_Transform(ST_Segmentize(

ST_MakeLine(end_pts.yyj, end_pts.yyc)::geography,

10000)::geometry, 3979) AS geom

FROM end_pts

),

rast AS (

SELECT ST_Union(rast) AS r

FROM mrdem30, ln

WHERE ST_Intersects(ST_ConvexHull(rast), ln.geom)

),

-- Add Z values to that line

zln AS (

SELECT ST_SetZ(rast.r, ln.geom) AS geom

FROM rast, ln

),

-- Dump the points of the line for the graph

zpts AS (

SELECT (ST_DumpPoints(geom)).*

FROM zln

)

SELECT geom, ST_Z(geom) AS elevation

FROM zpts;
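To get a feel for how many vertices the segmentize step in the query above adds, here is a back-of-envelope sketch. It assumes a spherical Earth and the haversine formula, so the numbers will differ slightly from what the geography type computes on the spheroid, and the exact way ST_Segmentize subdivides the line may differ too. The helper names are illustrative.

```python
import math

EARTH_RADIUS_M = 6371008.8  # mean Earth radius; treating Earth as a sphere is an approximation


def haversine_m(lon1, lat1, lon2, lat2):
    """Great circle distance in meters between two lon/lat points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def min_segments(length_m, max_segment_m):
    """Fewest segments needed so that none is longer than max_segment_m."""
    return math.ceil(length_m / max_segment_m)


yyj = (-123.3656, 48.4284)  # Victoria, same coordinates as the query
yyc = (-114.0719, 51.0447)  # Calgary
route_m = haversine_m(*yyj, *yyc)

print(round(route_m / 1000), "km")
print(min_segments(route_m, 10000), "segments of at most 10 km")
```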

From the elevated points, we can make a map showing the flight line, and the elevations along the way.

Why does it work?

How is it possible to read the values off of a 56GB GeoTIFF file without ever downloading the file?

Cloud Optimized GeoTIFF

The difference between a "cloud GeoTIFF" and a "local GeoTIFF" is mostly a difference in how software accesses the data.

A local GeoTIFF probably resides on an SSD or some other storage that has fast random access. Small random reads will be fast, and so will large sequential reads. Local access is fast!

A cloud GeoTIFF resides on an "object store", a remote API that allows clients to read all of a file (with an HTTP "GET") or part of a file (with an HTTP "RANGE"). Each random read is quite slow, because the read involves setting up an HTTP connection (slow) and then transmitting the data over an internetwork (slow). The more reads you do, the worse performance gets. So the core goal of a "cloud format" is to reduce the number of reads required to access a subset of the data.

Reading multi-gigabyte raster files from object storage is a relatively new idea, formalized only a couple years ago in the cloud optimized GeoTIFF (aka COG) specification.

The "cloud optimization" takes the form of just a few restrictions on the ordinary GeoTIFF:

- Pixel data are tiled

- Overviews are also tiled

Forcing tiling means that pixels that are near each other in space are also near each other in the file. Pixels that are near each other in the file can be read in a single read, which is great when you are reading from cloud object storage.
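To make "near each other in space, near each other in the file" concrete, here is a small sketch that maps a map coordinate to the 512x512 block that holds it, using the origin and pixel size from the gdalinfo output. It assumes a north-up raster. The function name is illustrative, and a real reader would additionally look up the block's byte offset in the TIFF header before issuing a range request.

```python
# Origin and pixel size copied from the gdalinfo output.
ORIGIN_X, ORIGIN_Y = -2454000.0, 3887400.0
PIXEL_SIZE = 30.0
TILE_PX = 512


def tile_for_coordinate(x: float, y: float) -> tuple:
    """Return (tile_col, tile_row) of the block containing the point x, y.

    Assumes a north-up raster: x grows east from the origin,
    y shrinks going south from the origin.
    """
    px = int((x - ORIGIN_X) // PIXEL_SIZE)
    py = int((ORIGIN_Y - y) // PIXEL_SIZE)
    return px // TILE_PX, py // TILE_PX


if __name__ == "__main__":
    # Two opposite corners (half a pixel inside) of the tile polygon
    # printed earlier by ST_ConvexHull land in the same block.
    print(tile_for_coordinate(-2054640 + 15, -367320 - 15))
    print(tile_for_coordinate(-2039280 - 15, -382680 + 15))
```

Any two points that map to the same block can be served by a single read of that block.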

(Another "cloud format" shaking up the industry is Parquet, and Crunchy Data Warehouse can do direct access and query on Parquet for precisely the same reasons that postgis_raster can query COG files -- the format is structured to reduce the number of reads needed to carry out common queries.)

GDAL Virtual File Systems

While a "cloud optimized" format like COG or GeoParquet is cool, it is not going to be a useful cloud format without a client library that knows how to efficiently read the file. The client needs to be native to the application, and it needs to be parsimonious in the number of file accesses it makes.

For a web application, that means that COG access requires a JavaScript library that understands the GeoTIFF format.

For a database written in C, like PostgreSQL/PostGIS, that means that access requires a C/C++ library that understands GeoTIFF and abstracts file system operations, so that the GeoTIFF reader can support both local file system access and remote cloud access.

For PostGIS raster, that library is GDAL. Every build of postgis_raster is linked to GDAL and allows us to take advantage of the library capabilities.

GDAL allows direct access to COG files on remote cloud storage services.

- Any HTTP server that supports Range requests

- AWS S3

- Google Cloud Storage

- Azure Blob Storage

- and others!

The specific cloud service support allows things like access keys to be used for reading private objects. There is more information about accessing secure buckets with PostGIS raster in this blog post.

Under the covers GDAL not only reads COG format files, it also maintains a modest in-memory data cache. This means there's a performance premium to making sure your raster queries are spatially coherent (each query point is near the previous one) because this maximizes the use of cached data.

by Paul Ramsey (Paul.Ramsey@crunchydata.com) at February 07, 2025 03:30 PM

February 03, 2025

Paul Ramsey

WKB EMPTY

I have been watching the codification of spatial data types into GeoParquet and now GeoIceberg with some interest, since the work is near and dear to my heart.

Writing a disk serialization for PostGIS is basically an act of format standardization – albeit a standard with only one consumer – and many of the same issues that the Parquet and Iceberg implementations are thinking about are ones I dealt with too.

Here is an easy one: if you are going to use well-known binary for your serialization (as GeoPackage and GeoParquet do) you have to wrestle with the fact that the ISO/OGC standard for WKB does not describe a standard way to represent empty geometries.

Empty geometries come up frequently in the OGC/ISO standards, and they are simple to generate in real operations – just subtract a big thing from a small thing.

SELECT ST_AsText(ST_Difference(

'POLYGON((0 0, 1 0, 1 1, 0 1, 0 0))',

'POLYGON((-1 -1, 3 -1, 3 3, -1 3, -1 -1))'

))

If you have a data set and are running operations on it, eventually you will generate some empties.

Which means your software needs to know how to store and transmit them.

Which means you need to know how to encode them in WKB.

And the standard is no help.

But I am!

WKB Commonalities

All WKB geometries start with a 1-byte “byte order flag” followed by a 4-byte “geometry type”.

enum wkbByteOrder {

wkbXDR = 0, // Big Endian

wkbNDR = 1 // Little Endian

};

The byte order flag signals which “byte order” all the other numbers will be encoded with. Most modern hardware uses “least significant byte first” (aka “little endian”) ordering, so usually the value will be “1”, but readers must expect to occasionally get “big endian” encoded data.

enum wkbGeometryType {

wkbPoint = 1,

wkbLineString = 2,

wkbPolygon = 3,

wkbMultiPoint = 4,

wkbMultiLineString = 5,

wkbMultiPolygon = 6,

wkbGeometryCollection = 7

};

The type number is an integer from 1 to 7, in the indicated byte order.

Collections

Collections are easy! GeometryCollection, MultiPolygon, MultiLineString and MultiPoint all have a WKB structure like this:

wkbCollection {

byte byteOrder;

uint32 wkbType;

uint32 numWkbSubGeometries;

WKBGeometry wkbSubGeometries[numWkbSubGeometries];

}

The way to signal an empty collection is to set its numWkbSubGeometries value to zero.

So for example, a MULTIPOLYGON EMPTY would look like this (all examples in little endian, spaces added between elements for legibility, using hex encoding).

01 06000000 00000000

The elements are:

- The byte order flag

- The geometry type (6 == MultiPolygon)

- The number of sub-geometries (zero)
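The three elements can be packed by hand to check the hex string. This is a minimal sketch using the standard library struct module; the function name is made up for illustration.

```python
import struct

WKB_NDR = 1  # little endian byte order flag


def empty_collection_wkb(geometry_type: int) -> bytes:
    """Little endian WKB for an empty collection type.

    Accepts MultiPoint (4), MultiLineString (5), MultiPolygon (6)
    and GeometryCollection (7).
    """
    if geometry_type not in (4, 5, 6, 7):
        raise ValueError("not a collection type")
    # "<" = little endian, B = 1-byte flag, I = uint32 type, I = uint32 count
    return struct.pack("<BII", WKB_NDR, geometry_type, 0)


if __name__ == "__main__":
    print(empty_collection_wkb(6).hex())  # 010600000000000000
```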

Polygons and LineStrings

The Polygon and LineString types are also very easy, because after their type number they both have a count of sub-objects (rings in the case of Polygon, points in the case of LineString) which can be set to zero to indicate an empty geometry.

For a LineString:

01 02000000 00000000

For a Polygon:

01 03000000 00000000

It is possible to create a Polygon made up of a non-zero number of empty linear rings. Is this construction empty? Probably. Should you make one of them? Probably not, since POLYGON EMPTY describes the case much more simply.

Points

Saving the best for last!

One of the strange blind spots of the ISO/OGC standards is the WKB Point. There is a standard text representation for an empty point, POINT EMPTY. But nowhere in the standard is there a description of a binary empty point, and the WKB structure of a point doesn’t really leave any place to hide one.

WKBPoint {

byte byteOrder;

uint32 wkbType; // 1

double x;

double y;

};

After the standard byte order flag and type number, the serialization goes directly into the coordinates. There’s no place to put in a zero.

In PostGIS we established our own add-on to the WKB standard, so we could successfully round-trip a POINT EMPTY through WKB – empty points are to be represented as a point with all coordinates set to the IEEE NaN value.

Here is a little-endian empty point.

01 01000000 000000000000F87F 000000000000F87F

And a big-endian one.

00 00000001 7FF8000000000000 7FF8000000000000
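Both encodings can be generated and recognized with a few lines of code. This sketch writes the NaN as the exact bit pattern shown in the examples, so the output does not depend on how a given platform produces NaN. The function names are illustrative, and the reader only handles plain 2D points.

```python
import math
import struct

QUIET_NAN_BITS = 0x7FF8000000000000  # the NaN bit pattern used in the examples above


def point_empty_wkb(little_endian: bool = True) -> bytes:
    """WKB for POINT EMPTY using the all-NaN convention."""
    order = "<" if little_endian else ">"
    flag = 1 if little_endian else 0
    # B = byte order flag, I = uint32 type (1 = Point), Q = raw 8-byte NaN pattern
    return struct.pack(order + "BIQQ", flag, 1, QUIET_NAN_BITS, QUIET_NAN_BITS)


def is_point_empty(wkb: bytes) -> bool:
    """True if the WKB is a 2D point whose coordinates are both NaN."""
    order = "<" if wkb[0] == 1 else ">"
    (geometry_type,) = struct.unpack_from(order + "I", wkb, 1)
    x, y = struct.unpack_from(order + "dd", wkb, 5)
    return geometry_type == 1 and math.isnan(x) and math.isnan(y)


if __name__ == "__main__":
    print(point_empty_wkb(True).hex())
    print(point_empty_wkb(False).hex())
```

A reader should test with an is-NaN check rather than compare bytes, since other NaN bit patterns are also valid IEEE NaN values.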

Most open source implementations of WKB have converged on this standardization of POINT EMPTY. The most common alternate behaviour is to convert POINT EMPTY objects, which are not representable, into MULTIPOINT EMPTY objects, which are. This might be confusing (an empty point would round-trip back to something with a completely different type number).

In general, empty geometries create a lot of “angels dancing on the head of a pin” cases for functions that otherwise have very deterministic results.

- “What is the distance in meters between a point and an empty polygon?” Zero? Infinity? NULL? NaN?

- “What geometry type is the intersection of an empty polygon and empty line?” Do I care? I do if I am writing a database system and have to provide an answer.

Over time the PostGIS project collated our intuitions and implementations in this wiki page of empty geometry handling rules.

The trouble with empty handling is that there are simultaneously a million different combinations of possibilities, and extremely low numbers of people actually exercising that code line. So it’s a massive time suck. We have basically been handling them on an “as needed” basis, as people open tickets on them.

Other Databases

- SQL Server changes POINT EMPTY to MULTIPOINT EMPTY when generating WKB.

SELECT Geometry::STGeomFromText('POINT EMPTY',4326).STAsBinary()

0x010400000000000000

- MariaDB and SnowFlake return NULL for a POINT EMPTY WKB.

SELECT ST_AsBinary(ST_GeomFromText('POINT EMPTY'))

NULL

January 18, 2025

PostGIS Development

PostGIS 3.5.2

The PostGIS Team is pleased to release PostGIS 3.5.2.

This version requires PostgreSQL 12 - 17, GEOS 3.8 or higher, and Proj 6.1+. To take advantage of all features, GEOS 3.12+ is needed. SFCGAL 1.4+ is needed to enable postgis_sfcgal support. To take advantage of all SFCGAL features, SFCGAL 1.5+ is needed.

This release is a bug fix release that includes bug fixes since PostGIS 3.5.1.

January 06, 2025

Crunchy Data (Snowflake)

Running an Async Web Query Queue with Procedures and pg_cron

The number of cool things you can do with the http extension is large, but putting those things into production raises an important problem.

The amount of time an HTTP request takes, 100s of milliseconds, is 10 to 20 times longer than the amount of time a normal database query takes.

This means that potentially an HTTP call could jam up a query for a long time. I recently ran an HTTP function in an update against a relatively small 1000 record table.

The query took 5 minutes to run, and during that time the table was locked to other access, since the update touched every row.

This was fine for me on my developer database on my laptop. In a production system, it would not be fine.

Geocoding, For Example

A really common table layout in a spatially enabled enterprise system is a table of addresses with an associated location for each address.

CREATE EXTENSION postgis;

CREATE TABLE addresses (

pk serial PRIMARY KEY,

address text,

city text,

geom geometry(Point, 4326),

geocode jsonb

);

CREATE INDEX addresses_geom_x

ON addresses USING GIST (geom);

INSERT INTO addresses (address, city)

VALUES ('1650 Chandler Avenue', 'Victoria'),

('122 Simcoe Street', 'Victoria');

New addresses get inserted without known locations. The system needs to call an external geocoding service to get locations.

SELECT * FROM addresses;

pk | address | city | geom | geocode

----+----------------------+----------+------+---------

8 | 1650 Chandler Avenue | Victoria | |

9 | 122 Simcoe Street | Victoria | |

When a new address is inserted into the system, it would be great to geocode it. A trigger would make a lot of sense, but a trigger will run in the same transaction as the insert. So the insert will block until the geocode call is complete. That could take a while. If the system is under load, inserts will pile up, all waiting for their geocodes.

Procedures to the Rescue

A better performing approach would be to insert the address right away, and then come back later and geocode any rows that have a NULL geometry.

The key to such a system is being able to work through all the rows that need to be geocoded, without locking those rows for the duration. Fortunately, there is a PostgreSQL feature that does what we want, the PROCEDURE.

Unlike functions, which wrap their contents in a single, atomic transaction, procedures allow you to apply multiple commits while the procedure runs. This makes them perfect for long-running batch jobs, like our geocoding problem.

CREATE PROCEDURE process_address_geocodes()

LANGUAGE plpgsql

AS $$

DECLARE

pk_list BIGINT[];

pk BIGINT;

BEGIN

--

-- Find all rows that need geocoding

--

SELECT array_agg(addresses.pk)

INTO pk_list

FROM addresses

WHERE geocode IS NULL;

--

-- Geocode those rows one at a time,

-- one transaction per row

--

IF pk_list IS NOT NULL THEN

FOREACH pk IN ARRAY pk_list LOOP

PERFORM addresses_geocode(pk);

COMMIT;

END LOOP;

END IF;

END;

$$;

The important thing is to break the work up so it is done one row at a time. Rather than running a single UPDATE to the table, we find all the rows that need geocoding, and loop through them, one row at a time, committing our work after each row.

Geocoding Function

The addresses_geocode(pk) function takes in a row primary key and then geocodes the address using the http extension to call the Google Maps Geocoding API. Taking in the primary key, instead of the address string, allows us to call the function one-at-a-time on each row in our working set of rows.

The function:

- reads the Google API key from the environment;

- reads the address string for the row;

- sends the geocode request to Google using the http extension;

- checks the validity of the response; and

- updates the row.

Each time through the function is atomic, so the controlling procedure can commit the result as soon as the function is complete.

--

-- Take a primary key for a row, get the address string

-- for that row, geocode it, and update the geometry

-- and geocode columns with the results.

--

CREATE FUNCTION addresses_geocode(geocode_pk bigint)

RETURNS boolean

LANGUAGE 'plpgsql'

AS $$

DECLARE

js jsonb;

full_address text;

res http_response;

api_key text;

api_uri text;

uri text := 'https://maps.googleapis.com/maps/api/geocode/json';

lat float8;

lng float8;

BEGIN

-- Fetch API key from environment

api_key := current_setting('gmaps.api_key', true);

IF api_key IS NULL THEN

RAISE EXCEPTION 'addresses_geocode: the ''gmaps.api_key'' is not currently set';

END IF;

-- Read the address string to geocode

SELECT concat_ws(', ', address, city)

INTO full_address

FROM addresses

WHERE pk = geocode_pk

LIMIT 1;

-- No row, no work to do

IF NOT FOUND THEN

RETURN false;

END IF;

-- Prepare query URI

js := jsonb_build_object(

'address', full_address,

'key', api_key

);

uri := uri || '?' || urlencode(js);

-- Execute the HTTP request

RAISE DEBUG 'addresses_geocode: uri [pk=%] %', geocode_pk, uri;

res := http_get(uri);

-- For any bad response, exit here, leaving all

-- entries NULL

IF res.status != 200 THEN

RETURN false;

END IF;

-- Parse the geocode

js := res.content::jsonb;

-- Save the json geocode response

RAISE DEBUG 'addresses_geocode: saved geocode result [pk=%]', geocode_pk;

UPDATE addresses

SET geocode = js

WHERE pk = geocode_pk;

-- For any non-usable geocode, exit here,

-- leaving the geometry NULL

IF js->>'status' != 'OK' OR js->'results'->>0 IS NULL THEN

RETURN false;

END IF;

-- For any non-usable coordinates, exit here

lat := js->'results'->0->'geometry'->'location'->>'lat';

lng := js->'results'->0->'geometry'->'location'->>'lng';

IF lat IS NULL OR lng IS NULL THEN

RETURN false;

END IF;

-- Save the geocode result as a geometry

RAISE DEBUG 'addresses_geocode: got POINT(%, %) [pk=%]', lng, lat, geocode_pk;

UPDATE addresses

SET geom = ST_Point(lng, lat, 4326)

WHERE pk = geocode_pk;

-- Done

RETURN true;

END;

$$;
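The request building and response checking in the function above can be tried outside the database. The sketch below mirrors those two steps in Python. The response shape is inferred from the JSON paths the function reads, the sample response is hand-written with made-up coordinates, and the http extension's urlencode() may escape some characters differently from Python's (for example spaces). The helper names are illustrative.

```python
import json
from urllib.parse import urlencode

GEOCODE_URI = "https://maps.googleapis.com/maps/api/geocode/json"


def build_geocode_uri(full_address: str, api_key: str) -> str:
    """Append the address and key as URL-encoded query parameters."""
    return GEOCODE_URI + "?" + urlencode({"address": full_address, "key": api_key})


def extract_lng_lat(response_text: str):
    """Return (lng, lat), or None when the response is not usable."""
    js = json.loads(response_text)
    results = js.get("results") or []
    if js.get("status") != "OK" or not results:
        return None
    location = results[0].get("geometry", {}).get("location", {})
    lat, lng = location.get("lat"), location.get("lng")
    if lat is None or lng is None:
        return None
    return lng, lat


# Hand-written sample with made-up coordinates, containing only the
# fields that the function above reads.
sample = '{"status": "OK", "results": [{"geometry": {"location": {"lat": 48.4, "lng": -123.3}}}]}'

if __name__ == "__main__":
    print(build_geocode_uri("1650 Chandler Avenue, Victoria", "KEY"))
    print(extract_lng_lat(sample))
```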

Deploy with pg_cron

We now have all the parts of a geocoding engine:

- a function to geocode a row; and,

- a procedure that finds rows that need geocoding.

What we need is a way to run that procedure regularly, and fortunately there is a very standard way to do that in PostgreSQL — pg_cron.

If you install and enable pg_cron in the usual way, in the postgres database, new jobs must be added from inside the postgres database, using the cron.schedule_in_database() function to target other databases.

--

-- Schedule our procedure in the "geocode_example_db" database

--

SELECT cron.schedule_in_database(

'geocode-process', -- job name

'15 seconds', -- job frequency

'CALL process_address_geocodes()', -- sql to run

'geocode_example_db' -- database to run in

);

Wait, 15 seconds frequency? What if a process takes more than 15 seconds, won't we end up with a stampeding herd of procedure calls? Fortunately no, pg_cron is smart enough to check and defer if a job is already in process. So there's no major downside to calling the procedure fairly frequently.

Conclusion

- HTTP and AI and BI rollup calls can run for a "long time" relative to desired database query run-times.

- PostgreSQL PROCEDURE calls can be used to wrap up a collection of long running functions, putting each into an individual transaction to lower locking issues.

- pg_cron can be used to deploy those long running procedures, to keep the database up-to-date while keeping load and locking levels reasonable.

by Paul Ramsey (Paul.Ramsey@crunchydata.com) at January 06, 2025 02:30 PM

December 26, 2024

Crunchy Data (Snowflake)

Name Collision of the Year: Vector

I can’t get through a zoom call, a conference talk, or an afternoon scroll through LinkedIn without hearing about vectors. Do you feel like the term vector is everywhere this year? It is. Vector actually means several different things and it's confusing. Vector means AI data, GIS locations, digital graphics, and a type of query optimization, and more. The terms and uses are related, sure. They all stem from the same original concept. However their practical applications are quite different. So “Vector” is my choice for this year’s name collision of the year.

In this post I want to break down the vector. The history of the vector, how vectors were used in the past and how they evolved to what they are today (with examples!).

The original vector

The idea that vectors are based on goes back to the 1600s, when René Descartes first developed the Cartesian XY coordinate system to represent points in space. Descartes didn't use the word vector, but he did develop a numerical representation of a location and direction. Numerical location is the foundational concept of the vector, used for measuring spatial relationships.

The first use of the term vector was in the 1840s by an Irish mathematician named William Rowan Hamilton. Hamilton defined a vector as a quantity with both magnitude and direction in three-dimensional space. He used it to describe geometric directions and distances, like arrows in 3D space. Hamilton combined his vectors with several other math terms to solve problems with rotation and three dimensional units.

The word Hamilton chose, vector, comes from the Latin word vehere meaning ‘to carry’ or ‘conveyor’ (yes, same origin for the word vehicle). We assume Hamilton chose this Latin word origin to emphasize the idea of a vector carrying a point from one location to another.

There’s a book about the history of vectors published just this year, and a nice summary here. I’ve already let Santa know this is on my list this year.

Mathematical vectors

Building upon Hamilton’s work, vectors have been used extensively in linear algebra, both before and after the arrival of computational math. If it has been 20 years since you took a math class, here’s a quick refresher.

Linear algebra is a branch of mathematics that focuses on vectors, matrices, and arrays of numbers. Here’s a super simple mathematical vector equation. We have two points on an XY coordinate system, point A at (1, 2) and point B at (4, 6). The vector formula for this is below in this diagram, with the final solution (3, 4).
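Written out, the calculation looks like this. The vector from A to B comes from subtracting A's coordinates from B's. The length at the end is an extra step that isn't part of the example above; I've added it only to show the "magnitude" half of Hamilton's definition.

```latex
\vec{AB} = B - A = (4 - 1,\; 6 - 2) = (3,\; 4)
\qquad
\lVert \vec{AB} \rVert = \sqrt{3^2 + 4^2} = 5
```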

Linear algebra of much more complicated forms is used in solving systems of linear differential equations. Vector equations have practical use cases in physics and engineering for things we use every day like heat conduction, fluids, and electrical circuits.

Computer science vectors

Early computer scientists made heavy use of the vector in a variety of ways. A computational vector can be similar to the example above, or even just a simple numeric array of fixed size where the numbers have related values. In early computer programming, simple operations like addition or subtraction would be applied to a set of vectors.

A basic example of this could be financial portfolio analysis where you have two vectors: (1) portfolio weights, v1, showing the proportion of investment in different stocks, and (2) market impact adjustments, v2, that adjust those weights based on current market values. This code sample in C calculates the adjusted weight for each stock in the portfolio by adding the two vectors.

#include <stdio.h>

#define STOCKS 8

typedef float Portfolio[STOCKS];

int main() {
    // Portfolio weights (in percentages, out of 100)
    Portfolio portfolioWeights = {10.0, 20.0, 15.0, 25.0, 5.0, 10.0, 10.0, 5.0};

    // Market impact adjustments (positive or negative percentages)
    Portfolio marketAdjustments = {0.5, -0.3, 1.0, -0.5, 0.2, -0.1, 0.0, 0.7};

    Portfolio adjustedWeights;

    // Perform vector addition
    for (int i = 0; i < STOCKS; i++) {
        adjustedWeights[i] = portfolioWeights[i] + marketAdjustments[i];
    }

    // Print adjusted weights
    printf("Adjusted Portfolio Weights: <");
    for (int i = 0; i < STOCKS; i++) {
        printf("%s%.1f%%", i > 0 ? ", " : "", adjustedWeights[i]);
    }
    printf(">\n");

    return 0;
}

Modern computer science builds on similar concepts of organizing and processing collections. The std::vector in C++ and Vec<T> in Rust are general-purpose dynamic arrays. They can hold virtually any data type, which makes them useful for managing or computing over collections of elements.

Graphics and vectors

Vector graphics were used in early arcade and video game development. Think of something like Spacewar! or Asteroids. Vectors could be used to draw lines and shapes like ships and stars.

Here’s a super simple example of how vectors could be used to draw a triangle.

// Placeholder macro: it expands to nothing so the example compiles.
// A real program would call a graphics routine here.
#define DrawLine(pt1, pt2)

typedef struct Point {
    int x, y;
} Point;

typedef struct Line {
    Point start;
    Point end;
} Line;

Line lines[3] = {
    {{0, 0}, {100, 100}},   // Line 1
    {{100, 100}, {200, 50}}, // Line 2
    {{200, 50}, {0, 0}}      // Line 3
};

// Loop through these lines to draw our triangle on the screen.
int main()
{
    for (int i = 0; i < 3; i++)
    {
        DrawLine(lines[i].start, lines[i].end);
    }
    return 0;
}

These early xy arrays and computerized graphics paved the way for modern computer graphics which make use of vectors in even more advanced ways. When you play a modern 3D video game, many characters, objects, and movement you see on the screen are powered by linear algebra vectors.

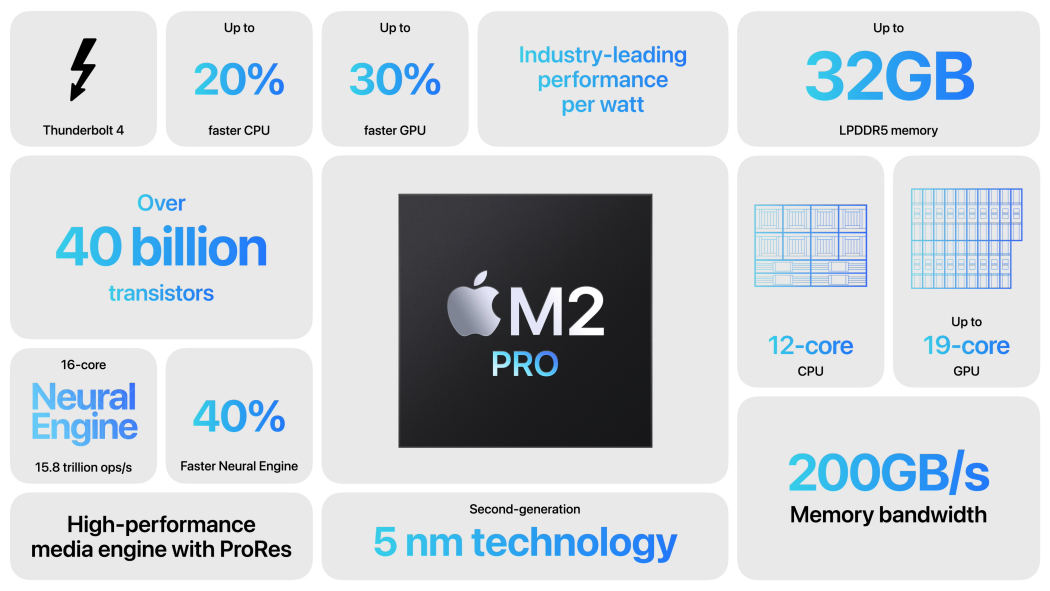

The Graphics Processing Unit (GPU) is a specialized processor developed in the 1990s and improved on in the decades since. GPUs handle the millions of vector operations required to create 3D graphics in real time, and they are now used for far more than 3D graphics. Vector-based assembly operations can work on a contiguous block of memory, applying the same operation across different chunks of it.

Scalable vector graphics (SVG)

SVGs are 2D vector graphics that have become a de facto image format in web design and development. The SVG standard lets graphics be described as a series of numbers representing shapes and paths, so they work across devices and web browsers. SVGs are used to display logos, icons, charts, and animations. Their popularity took off in the mid 2010s and continues to grow, thanks to their performance and lightweight nature.

SVGs use a set of vector numbers to describe the object they represent. A simple SVG with a few shapes might contain dozens of numbers. A more complex SVG, like one for a detailed icon or map, might include thousands.

Here’s what the SVG of the Crunchy Data hippo logo looks like:

<svg

id="aad9811e-aeeb-4dae-a064-7d889077489a"

data-name="Layer 6"

xmlns="http://www.w3.org/2000/svg"

viewBox="0 0 1407.15 1158.38"

>

<path

d="M553.21,651l124.3,122.4-154.9-89Zm-304.5-496.6-54.6,148.9L35.71,415.19,6.81,523.49l-6.5,67.9,83.1,65.2h0l208.7-10.3,114.1-155.7,3.6-166,199.3-200.5-104.7-41.9Zm0,0,360.4-30.3m-104.7-41.9-114.1,61.4-130.7,213.5-105.5,150.5-70.8,149m322.9-166-145.9-135.4-222.5,62.1M294.21,642l-140.1-135.1L1,586.39m36.1-171.2,116.3,91,190.8-73.1m-95.5-278.7L259.61,357m150.1-32.4-19.4-181m218.8-19.5,14.7,196.7-59.5,137.4-49.1,104-92.7,47.2-128.8,35.9,139.8,39.3L621.21,632l62.4-196.3,16.7-174.4-92.4-136.9M621.21,632l-215-141.5,26.7,194-349.6-28m617-395.2-294.1,229.3,215,141.5m-217.1,50.2,8.6,306.7-17.5,35.7,6.1,52.8,101.7-4.8,63.5-63.9,6-47.9L588.41,792h0l89.2-18.4,97.2,23.4,84.2,19.7-2.1,46.5,10.5,30.4-19,28.9,28.1,1.9,1.6-.8,6,105.5-15.1,40.1,25.3,88.7,132.1-33-6.1-50.6,65.5-306.8,49.5-12.2,57-43,29,41.1,2.4,88.3,5.8,61.8-18.6,46.2,23.5,38.7,96.5-12.4,44.3-43.5-21.1-28.8,13.8-216.9,4-65.5,34.6-116.4-23.4-120.4-332.8-215.1L842,135l-151.2,47.5m119.9,84.8-202.4-143.1m202.4,143.1L849,552.39l134.2-214.2ZM1164,453.09l-180.8-115-42.6,277Zm-486.5,320.4,263-158.4L849,552.39Zm133.2-506.2-110.6-4-4.6,48.5,115-42.3m-133,504-154.9-89,65.7,107.4Zm170.3-25.9,35.1,87,57.6-219.4Zm117.7,83.3-25-215.8-57.6,219.4Zm-24.9-215.8,25,215.8,120.2-63.5Zm12.7,418.8,94-83.9-81.9-119.1Zm-105.5-285.6-170.3,25.2,200,47.7ZM1164,453.09l-70.6,270.3,141.1-114Zm70.5,156.3,77.8-132.8L1195,262.89Zm-251.3-271.3,180.8,115,31.1-190.2Zm67.1-168.8-67.1,168.8,211.9-75.2ZM842,135l-151.2,47.5,359.5-13.9Zm244.2,633.2,7.2-44.8m167.2-63.1,51.8-183.7-77.9,132.8Zm0,0-26.1-50.9-99.3,145.8Zm0,0,84.1-88.7-32.4-95Zm84.1-88.7-84.1,88.7,42.4-7.6Zm-22.6-226.7-9.8,131.7,32.4,95Zm0,0,22.6,226.7,62-69Zm46.3,339.3-65.3-30.2,56.7,161.5Zm-114.7,122.3,77.3-31.9-28.1-121.8Zm49.2-153.7,28.1,121.8,28.9,40.9Zm69.3-32.3-27.5-48.9,23.7,112.6ZM1331,774.59l-4.7,123.7,33.6-82.7Zm-93.9,213.3,94.5-12.7-5.4-78.4Zm16.6-181.4-30,35.1,13.4,139.9,63.4-138.2Zm0,0-33.1-115.9,3.1,150.6Zm-32.8-115.2,82.2-37.2m-73.5,249.3,7.6,84.6m94.5-12.8,43.7-42.9-49.1-35.5Zm-5.8-7
9.2,29.1,7.3m-942.3,85.6-11.4,88.5,63.4-55.8Zm51.2,31.9,38.7,52.5,63.8-64.5Zm556,53.9-66.6-40.8-59.2,123.9Zm-431.6-282.8-112.2,70.4-11.4,159.3Zm-178.6,89.3,2.9,107.7,63.5-126.6Zm238-729.1,40.7-57.4L702,45.29l-13.6-32L650.11.49l-13.6,2.6-31.2,41.3-10.3,73,14.1,6.7ZM650,.49l-48.6,74.7,81.4-45.9Zm32.7,28.4L702,45.19m-19.1-15.3,5.5,64.8L647.31,110l-38.2,14.1m0,0-7.7-48.9m87-61.9-5.5,16.6L650,.59m-269.3,116-4.1-59.1-45-22.9-43.7,26.8,2.7,42.8,11.5,35.3M346.21,81l-14.6-46.5-41,69.7L346.21,81l-43.8,58.5m74.2-82.1L346.21,81l34.5,35.6m486.4,777.9,10.9,29m4.9-90.7-15.6,60.6,10.7,30.1Zm-407,32,46.7-180.3-112.9,196.7m23.2-196.6,89.7-.1,30.6-33.4M744.81,394l-10.6,113.9L849,552.39Zm-75.5,84.8L621.21,632l113.1-124.1Zm64.9,29.1-56.7,265.6m0,0,27.2-133.3-83.6-8.1Zm68.1-380.1-59.2,18m9-99.7,49.4,82.3,65.7-124.6Zm-289.2,178.9,277.3-54.9m200.3,594.7,31-31.4,50.7-168.1m-82.6,1.9,31.9,166.1,38.5,34.9M1331,774.59l-30.4,68.7,25.8,53.5M287.91,61.39l23.9,6.7"

fill="none"

stroke="currentColor"

stroke-linejoin="bevel"

/>

</svg>

GIS vector data

In modern computational GIS, vectors are used to represent geometric data types like points, line-strings, and polygons. Like any other x,y,z vector coordinate system, the vectors refer to specific global points or objects. There are quite a few different spatial reference systems that can be used. The vectors are typically stored in PostGIS using Well-Known Binary (WKB), a standardized binary encoding for geometries (PostGIS uses an extended form, EWKB, that also carries the spatial reference ID). Vectors also power many of the key functions in modern geospatial data processing like intersections, distance calculations, joins, and proximity analysis.

Here’s the vector binary for (imho) the best BBQ restaurant in the world:

 restaurant_name |                        geom
-----------------+----------------------------------------------------
 Gates Bar B Q   | 0101000020E610000082E673EE76A557C007B47405DB884340

AI Vectors

AI vectors emerged from the mathematical and computational foundations of vectors that I covered above. Through advancements in hardware and in machine learning algorithms, vectors can be used as a system to describe virtually anything. Large Language Models (LLMs) convert data like text, images, or other inputs into vectors through a process called embedding. LLMs use layers of neural networks to process the embeddings in a specific context. So the vectors numerically represent relationships between objects within the context they were created with.

You’ve probably heard of the pgvector extension that is used for storing and querying AI-related embedding data. pgvector adds a custom data type, vector, for storing fixed-length arrays of floating-point numbers. The vector type can store up to 16,000 dimensions.

My colleague Karen Jex has a great embedding talk she does about AI called “What’s the Opposite of a Corn Dog”. The vector embedding for a corn dog from an OpenAI menu dataset is an array of a staggering 1536 numbers. Here’s a snippet.

// vector of a Corn Dog

[0.0045576594,-0.00088141876,-0.014024569,-0.011641564,0.0038251784,0.010306821,-0.01265076,-0.013672978,-0.01582159,-0.041670028,0.0044274405,.........0.040185533,-0.010463083,0.004326521,-0.019571891,0.01853014,0.025770308,-0.017787892,0.0018572462]

In AI and machine learning, a vector is an ordered list of numbers that represents data for literally anything. Really what “AI” is doing is turning anything and everything into a vector and then comparing that vector with other vectors in the same vector space.

Vectorized queries

As the use of computational vectors has become so popular along with machine learning, the underlying methods and CPU hardware for processing vector data are now used to process other kinds of data.